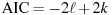

is the

log-likelihood and k is the total number of

parameters estimated. Note that X-12-ARIMA

does not produce information criteria such as AIC when estimation

is by conditional ML.

is the

log-likelihood and k is the total number of

parameters estimated. Note that X-12-ARIMA

does not produce information criteria such as AIC when estimation

is by conditional ML.

See also the Gretl Function Reference.

The following commands are documented below.

Note that brackets "[" and "]" are used to indicate that certain elements of commands are optional. The brackets should not be typed by the user.

| Argument: | varlist |
| Options: | --lm (do an LM test, OLS only) |
| | --auto=criterion (forward stepwise, OLS only) |
| | --quiet (print only the basic test result) |
| | --silent (don't print anything) |
| | --vcv (print covariance matrix for augmented model) |
| | --both (IV estimation only, see below) |
| Examples: | add 5 7 9 |
| | add xx yy zz --quiet |
| | add xlist --auto=BIC |

Must be invoked after an estimation command. If neither the --lm nor the --auto option is given, this command performs a joint test for the addition of the specified variables to the last model. An augmented version of the original model is estimated, including the variables in varlist, and a Wald test is carried out on the augmented model. The augmented model replaces the original as the "last model" for the purposes of, for example, retrieving the residuals as $uhat or doing further tests. The results of the Wald test may be retrieved using the accessors $test and $pvalue.
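As an illustration of the default (Wald) behavior, here is a minimal sketch on simulated data. The series names (x1, x2, x3, y) and the seed are made up for the example and are not part of gretl.

```hansl
# Simulated data; all names here are hypothetical
nulldata 200
set seed 20240
series x1 = normal()
series x2 = normal()
series x3 = normal()
series y = 1 + 0.5*x1 + 0.8*x2 + normal()

# Base model omits x2 and x3
ols y 0 x1 --quiet

# Joint Wald test; the augmented model becomes the "last model"
add x2 x3 --quiet
scalar W = $test
scalar pv = $pvalue
printf "test statistic = %g, p-value = %g\n", W, pv

# Residuals now come from the augmented model
series u_aug = $uhat
```

Since x2 enters the simulated process with a sizeable coefficient, the test should reject the null that the added variables are irrelevant.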

The --both option applies only if the last model was estimated via two-stage least squares: it specifies that the new variables should be added to the list of instruments as well as to the list of regressors, the default being to add them to the regressors only.

Given the --lm option (available only for models estimated via OLS), an LM test is performed. An auxiliary regression is run in which the dependent variable is the residual from the last model and the independent variables are those from the last model plus varlist. Under the null hypothesis that the added variables have no additional predictive power, the sample size times the unadjusted R-squared from this regression is distributed as chi-square with degrees of freedom equal to the number of added regressors. Under this option the original model is not replaced.
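The auxiliary regression described above can be replicated by hand, which may help in seeing what the --lm option computes. This is only a sketch on simulated data with made-up series names; it assumes the accessors $T and $rsq give the sample size and unadjusted R-squared of the auxiliary regression.

```hansl
# Simulated data; all names here are hypothetical
nulldata 200
set seed 20240
series x1 = normal()
series x2 = normal()
series x3 = normal()
series y = 1 + 0.5*x1 + 0.8*x2 + normal()

# Original model and its residual
ols y 0 x1 --quiet
series uh = $uhat

# Auxiliary regression: residual on original regressors plus candidates
ols uh 0 x1 x2 x3 --quiet
scalar LM = $T * $rsq
scalar pv = pvalue(X, 2, LM)    # chi-square with 2 df (two added regressors)
printf "LM = %g, p-value = %g\n", LM, pv

# Built-in test, run against the original model for comparison
ols y 0 x1 --quiet
add x2 x3 --lm
```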

The --auto option (which cannot be combined with --lm) calls for forward stepwise regression, using the QR algorithm described by Hastie et al. (2020). In this case varlist is interpreted as a list of candidates for addition to the original model. At each step the method determines which candidate offers the greatest improvement in fit according to the specified criterion (given as the parameter to the option). The algorithm halts when no further improvement is possible. The criterion must take one of these forms:

- An Information Criterion: AIC, BIC or HQC. The "best" candidate at each step is then that which gives the greatest improvement (reduction) in the selected criterion.

- An α value (positive decimal fraction). In this case the figure of merit is the sum of squared residuals. The algorithm halts when no remaining candidate gives a reduction in SSR that is statistically significant at the α level on a chi-square test.

Menu path: Model window, /Tests/Add variables

| Arguments: | order varlist |
| Options: | --nc (test without a constant) |
| | --c (with constant only) |
| | --ct (with constant and trend) |
| | --ctt (with constant, trend and trend squared) |
| | --seasonals (include seasonal dummy variables) |
| | --gls (de-mean or de-trend using GLS) |
| | --verbose (print regression results) |
| | --quiet (suppress printing of results) |
| | --difference (use first difference of variable) |
| | --test-down[=criterion] (automatic lag order) |
| | --perron-qu (see below) |
| Examples: | adf 0 y |
| | adf 2 y --nc --c --ct |
| | adf 12 y --c --test-down |
| | See also jgm-1996.inp |

The options shown above and the discussion which follows mostly pertain to the use of the adf command with regular time series data. For use of this command with panel data please see the section titled "Panel data" below.

This command computes a set of Dickey–Fuller tests on each of the listed variables, the null hypothesis being that the variable in question has a unit root. (But if the --difference flag is given, the first difference of the variable is taken prior to testing, and the discussion below must be taken as referring to the transformed variable.)

By default, two variants of the test are shown: one based on a regression containing a constant and one using a constant and linear trend. You can control the variants that are presented by specifying one or more of the option flags --nc, --c, --ct, --ctt.

The --gls option can be used in conjunction with one or other of the flags --c and --ct. The effect of this option is that the series to be tested is demeaned or detrended using the GLS procedure proposed by Elliott, Rothenberg and Stock (1996), which gives a test of greater power than the standard Dickey–Fuller approach. This option is not compatible with --nc, --ctt or --seasonals.

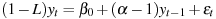

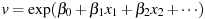

In all cases the dependent variable in the test regression is the first difference of the specified series, y, and the key independent variable is the first lag of y. The regression is constructed such that the coefficient on lagged y equals the root in question, α, minus 1. For example, the model with a constant may be written as

(1 - L) y_t = b_0 + (α - 1) y_{t-1} + e_t

where L is the lag operator, b_0 is the constant and e_t is the error term.

Under the null hypothesis of a unit root the coefficient on lagged y equals zero. Under the alternative that y is stationary this coefficient is negative. So the test is inherently one-sided.

The simplest version of the Dickey–Fuller test assumes that the error term in the test regression is serially uncorrelated. In practice this is unlikely to be the case and the specification is often extended by including one or more lags of the dependent variable, giving an Augmented Dickey–Fuller (ADF) test. The order argument governs the number of such lags, k, possibly depending on the sample size, T.

For a fixed, user-specified k: give a non-negative value for order.

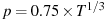

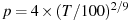

For T-dependent k: give order as -1. The order is then set following the recommendation of Schwert (1989), namely the integer part of 12(T/100)^0.25.
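The Schwert rule is easy to evaluate by hand. The sketch below uses an arbitrary T of 200; which T gretl uses internally (full or effective sample) is not stated here, so treat the result as indicative only.

```hansl
# Schwert (1989) maximum lag order: integer part of 12*(T/100)^0.25
scalar T = 200                      # hypothetical sample size
scalar kmax = int(12 * (T/100)^0.25)
printf "Schwert order for T = %d: %d\n", T, kmax
```

For T = 100 the rule gives 12, and for T = 200 it gives 14.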

In general, however, we don't know how many lags will be required to "whiten" the Dickey–Fuller residual. It's therefore common to specify the maximum value of k and let the data decide the actual number of lags to include. This can be done via the --test-down option. The criterion for selecting optimal k may be set using the parameter to this option, which should be one of AIC, BIC or tstat, AIC being the default.

When testing down via AIC or BIC, the final lag order for the ADF equation is that which optimizes the chosen information criterion (Akaike or Schwarz Bayesian). The exact procedure depends on whether or not the --gls option is given. When GLS is specified, AIC and BIC are the "modified" versions described in Ng and Perron (2001), otherwise they are the standard versions. In the GLS case a refinement is available. If the additional option --perron-qu is given, lag-order selection is performed via the revised method recommended by Perron and Qu (2007). In this case the data are first demeaned or detrended via OLS; GLS is applied once the lag order is determined.

When testing down via the t-statistic method is called for, the procedure is as follows:

1. Estimate the Dickey–Fuller regression with k lags of the dependent variable.

2. Is the last lag significant? If so, execute the test with lag order k. Otherwise, let k = k – 1; if k equals 0, execute the test with lag order 0, else go to step 1.

In the context of step 2 above, "significant" means that the t-statistic for the last lag has an asymptotic two-sided p-value, against the normal distribution, of 0.10 or less.
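The two steps above can be sketched as a hansl loop. This is a rough illustration, not gretl's internal code: it uses a simulated random walk with made-up names, a maximum order of 6, and it does not hold the estimation sample fixed across lag orders, so it is not guaranteed to reproduce the lag order chosen by the built-in --test-down=tstat.

```hansl
# Simulated random walk; all names here are hypothetical
nulldata 300
setobs 1 1 --time-series
set seed 777
series y = cum(normal())
series dy = diff(y)
series y1 = y(-1)

scalar k = 6                        # maximum lag order
loop while k > 0
    # Step 1: Dickey-Fuller regression with k lags of the dependent variable
    list dlags = lags(k, dy)
    ols dy 0 y1 dlags --quiet
    # Step 2: two-sided asymptotic p-value for the last lag
    matrix b = $coeff
    matrix se = $stderr
    scalar tk = b[rows(b)] / se[rows(se)]
    scalar pv = 2 * pvalue(z, abs(tk))
    if pv <= 0.10
        break
    endif
    k -= 1
endloop
printf "lag order chosen by hand: %d\n", k

# Built-in equivalent for comparison
adf 6 y --c --test-down=tstat
```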

To sum up, if we accept the various arguments of Perron, Ng, Qu and Schwert referenced above, the favored command for testing a series y is likely to be:

adf -1 y --c --gls --test-down --perron-qu

(Or substitute --ct for --c if the series seems to display a trend.) The lag order for the test will then be determined by testing down via modified AIC from the Schwert maximum, with the Perron–Qu refinement.

P-values for the Dickey–Fuller tests are based on response-surface estimates. When GLS is not applied these are taken from MacKinnon (1996). Otherwise they are taken from Cottrell (2015) or, when testing down is performed, Sephton (2021). The P-values are specific to the sample size unless they are labeled as asymptotic.

When the adf command is used with panel data, to produce a panel unit root test, the applicable options and the results shown are somewhat different.

First, while you may give a list of variables for testing in the regular time-series case, with panel data only one variable may be tested per command. Second, the options governing the inclusion of deterministic terms become mutually exclusive: you must choose between no-constant, constant only, and constant plus trend; the default is constant only. In addition, the --seasonals option is not available. Third, the --verbose option has a different meaning: it produces a brief account of the test for each individual time series (the default being to show only the overall result).

The overall test (null hypothesis: the series in question has a unit root for all the panel units) is calculated in one or both of two ways: using the method of Im, Pesaran and Shin (Journal of Econometrics, 2003) or that of Choi (Journal of International Money and Finance, 2001). The Choi test requires that P-values are available for the individual tests; if this is not the case (depending on the options selected) it is omitted. The particular statistic given for the Im, Pesaran, Shin test varies as follows: if the lag order for the test is non-zero their W statistic is shown; otherwise if the time-series lengths differ by individual, their Z statistic; otherwise their t-bar statistic. See also the levinlin command.

Menu path: /Variable/Unit root tests/Augmented Dickey-Fuller test

| Arguments: | response treatment [ block ] |
| Option: | --quiet (don't print results) |

Analysis of Variance: response is a series measuring some effect of interest and treatment must be a discrete variable that codes for two or more types of treatment (or non-treatment). For two-way ANOVA, the block variable (which should also be discrete) codes for the values of some control variable.

Unless the --quiet option is given, this command prints a table showing the sums of squares and mean squares along with an F-test. The F-test and its p-value can be retrieved using the accessors $test and $pvalue respectively.

The null hypothesis for the F-test is that the mean response is invariant with respect to the treatment type or, in other words, that the treatment has no effect. Strictly speaking, the test is valid only if the variance of the response is the same for all treatment types.
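A minimal one-way example on simulated data follows. The series names and the three-group design are invented for illustration; the treatment series is marked as discrete explicitly to be on the safe side.

```hansl
# Three treatment groups of 30 observations each; names are hypothetical
nulldata 90
set seed 42
series treat = 1 + (index > 30) + (index > 60)
discrete treat
series resp = 10 + 2*(treat == 3) + normal()

anova resp treat
printf "F = %g, p-value = %g\n", $test, $pvalue
```

Because the third group has a shifted mean in the simulated data, the F-test should reject the null of no treatment effect.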

Note that the results shown by this command are in fact a subset of the information given by the following procedure, which is easily implemented in gretl. Create a set of dummy variables coding for all but one of the treatment types. For two-way ANOVA, in addition create a set of dummies coding for all but one of the "blocks". Then regress response on a constant and the dummies using ols. For a one-way design the ANOVA table is printed via the --anova option to ols. In the two-way case the relevant F-test is found by using the omit command. For example (assuming y is the response, xt codes for the treatment, and xb codes for blocks):

    # one-way
    list dxt = dummify(xt)
    ols y 0 dxt --anova
    # two-way
    list dxb = dummify(xb)
    ols y 0 dxt dxb
    # test joint significance of dxt
    omit dxt --quiet

Menu path: /Model/Other linear models/ANOVA

| Argument: | filename |
| Options: | --time-series (see below) |
| | --fixed-sample (see below) |
| | --update-overlap (see below) |
| | --quiet (print less confirmation details, see below) |
| | See below for additional specialized options |

Opens a data file and appends the content to the current dataset, if the new data are compatible. The program will try to detect the format of the data file (native, plain text, CSV, Gnumeric, Excel, etc.). Please note that the join command offers much more control over the matching of supplementary data to the current dataset. Also note that appending data to an existing panel dataset is potentially quite tricky; see the section headed "Panel data" below.

The appended data may take the form of additional observations on series already present in the dataset, new series, or both. In the case of adding series, compatibility requires either (a) that the number of observations for the new data equals that for the current data, or (b) that the new data carries clear observation information so that gretl can work out how to place the values. Note that if there's a "perfect match" of observation information (that is, conditions (a) and (b) are both satisfied), it is assumed that series, rather than observations, are to be added. If the file to be appended contains no series names other than those already present in the current dataset, nothing is done and a warning is shown.

One case that is not supported is where the new data start earlier and also end later than the original data. To add new series in such a case you can use the --fixed-sample option; this has the effect of suppressing the adding of observations, and so restricting the operation to the addition of new series.

When a data file is selected for appending, there may be an area of overlap with the existing dataset; that is, one or more series may have one or more observations in common across the two sources. If the option --update-overlap is given, the append operation will replace any overlapping observations with the values from the selected data file, otherwise the values currently in place will be unaffected.

The additional specialized options --sheet, --coloffset, --rowoffset and --fixed-cols work in the same way as with open; see that command for explanations.

By default some information about the appended dataset is printed. The --quiet option reduces that printout to a confirmatory message stating just the path to the file. If you want the operation to be completely silent, then issue the command set verbose off before appending the data, in combination with the --quiet option.

When new data are appended to a panel dataset, the result will be correct only if both the "units" or "individuals" and the time-periods are properly matched.

Two relatively simple cases should be handled correctly by append. Let N denote the number of cross-sectional units and T denote the number of time periods in the current panel, and let m denote the number of observations for the new data. If m = N the new data are taken to be time-invariant, and are copied into place for each time period. On the other hand, if m = T the data are treated as invariant across the panel units, and are copied into place for each unit. If T = N an ambiguity arises. In that case the new data are treated as time-invariant by default, but you can force gretl to treat them as time series (invariant across the units) via the --time-series option.

If the current dataset and the incoming data are both recognized as panel data then appending is supported only if both the T and N dimensions match; otherwise an error is flagged. For more complex cases use join instead of append.

Menu path: /File/Append data

| Arguments: | lags ; depvar indepvars |
| Options: | --vcv (print covariance matrix) |
| | --quiet (don't print parameter estimates) |
| Example: | ar 1 3 4 ; y 0 x1 x2 x3 |

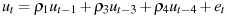

Computes parameter estimates using the generalized Cochrane–Orcutt iterative procedure; see Section 9.5 of Ramanathan (2002). Iteration is terminated when successive error sums of squares do not differ by more than 0.005 percent or after 20 iterations.

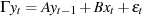

lags is a list of lags in the residuals, terminated by a semicolon. In the above example, the error term is specified as

u_t = rho_1*u_{t-1} + rho_3*u_{t-3} + rho_4*u_{t-4} + e_t

Menu path: /Model/Univariate time series/AR Errors (GLS)

| Arguments: | depvar indepvars |
| Options: | --hilu (use Hildreth–Lu procedure) |
| | --pwe (use Prais–Winsten estimator) |
| | --vcv (print covariance matrix) |
| | --no-corc (do not fine-tune results with Cochrane-Orcutt) |
| | --loose (use looser convergence criterion) |
| | --quiet (don't print anything) |
| Examples: | ar1 1 0 2 4 6 7 |
| | ar1 y 0 xlist --pwe |
| | ar1 y 0 xlist --hilu --no-corc |

Computes feasible GLS estimates for a model in which the error term is assumed to follow a first-order autoregressive process.

The default method is the Cochrane–Orcutt iterative procedure; see for example section 9.4 of Ramanathan (2002). The criterion for convergence is that successive estimates of the autocorrelation coefficient do not differ by more than 1e-6, or if the --loose option is given, by more than 0.001. If this is not achieved within 100 iterations an error is flagged.

If the --pwe option is given, the Prais–Winsten estimator is used. This involves an iteration similar to Cochrane–Orcutt; the difference is that while Cochrane–Orcutt discards the first observation, Prais–Winsten makes use of it. See, for example, Chapter 13 of Greene (2000) for details.

If the --hilu option is given, the Hildreth–Lu search procedure is used. The results are then fine-tuned using the Cochrane–Orcutt method, unless the --no-corc flag is specified. The --no-corc option is ignored for estimators other than Hildreth–Lu.
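The three estimators can be compared on the same data. The sketch below simulates a regression with AR(1) errors (autoregressive coefficient 0.6); the series names, sample size and seed are arbitrary choices for illustration.

```hansl
# Regression with AR(1) disturbances; all names are hypothetical
nulldata 200
setobs 1 1 --time-series
set seed 99
series x = normal()
series u = normal()
smpl 2 200
u = 0.6*u(-1) + normal()            # build the error process recursively
smpl full
series y = 1 + 2*x + u

ar1 y 0 x                           # Cochrane-Orcutt (default)
ar1 y 0 x --pwe                     # Prais-Winsten
ar1 y 0 x --hilu --no-corc          # Hildreth-Lu without fine-tuning
```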

Menu path: /Model/Univariate time series/AR Errors (GLS)

| Arguments: | order depvar indepvars |
| Option: | --quiet (don't print anything) |
| Example: | arch 4 y 0 x1 x2 x3 |

This command is retained at present for backward compatibility, but you are better off using the maximum likelihood estimator offered by the garch command; for a plain ARCH model, set the first GARCH parameter to 0.

Estimates the given model specification allowing for ARCH (Autoregressive Conditional Heteroskedasticity). The model is first estimated via OLS, then an auxiliary regression is run, in which the squared residual from the first stage is regressed on its own lagged values. The final step is weighted least squares estimation, using as weights the reciprocals of the fitted error variances from the auxiliary regression. (If the predicted variance of any observation in the auxiliary regression is not positive, then the corresponding squared residual is used instead).

The alpha values displayed below the coefficients are the estimated parameters of the ARCH process from the auxiliary regression.

See also garch and modtest (the --arch option).

| Arguments: | p d q [ ; P D Q ] ; depvar [ indepvars ] |
| Options: | --verbose (print details of iterations) |
| | --quiet (don't print out results) |
| | --vcv (print covariance matrix) |
| | --hessian (see below) |
| | --opg (see below) |
| | --nc (do not include a constant) |
| | --conditional (use conditional maximum likelihood) |
| | --x-12-arima (use X-12-ARIMA, or X13, for estimation) |
| | --lbfgs (use L-BFGS-B maximizer) |
| | --y-diff-only (ARIMAX special, see below) |
| | --lagselect (see below) |
| Examples: | arima 1 0 2 ; y |
| | arima 2 0 2 ; y 0 x1 x2 --verbose |
| | arima 0 1 1 ; 0 1 1 ; y --nc |
| | See also armaloop.inp, auto_arima.inp, bjg.inp |

Note: arma is an acceptable alias for this command.

If no indepvars list is given, estimates a univariate ARIMA (Autoregressive, Integrated, Moving Average) model. The values p, d and q represent the autoregressive (AR) order, the differencing order, and the moving average (MA) order respectively. These values may be given in numerical form, or as the names of pre-existing scalar variables. A d value of 1, for instance, means that the first difference of the dependent variable should be taken before estimating the ARMA parameters.

If you wish to include only specific AR or MA lags in the model (as opposed to all lags up to a given order) you can substitute for p and/or q either (a) the name of a pre-defined matrix containing a set of integer values or (b) an expression such as {1,4}; that is, a set of lags separated by commas and enclosed in braces.

The optional integer values P, D and Q represent the seasonal AR order, the order for seasonal differencing, and the seasonal MA order, respectively. These are applicable only if the data have a frequency greater than 1 (for example, quarterly or monthly data). These orders may be given in numerical form or as scalar variables.

In the univariate case the default is to include an intercept in the model but this can be suppressed with the --nc flag. If indepvars are added, the model becomes ARMAX; in this case the constant should be included explicitly if you want an intercept (as in the second example above).

An alternative form of syntax is available for this command: if you do not want to apply differencing (either seasonal or non-seasonal), you may omit the d and D fields altogether, rather than explicitly entering 0. In addition, arma is a synonym or alias for arima. Thus for example the following command is a valid way to specify an ARMA(2, 1) model:

arma 2 1 ; y

The default is to use the "native" gretl ARMA functionality, with estimation by exact ML; estimation via conditional ML is available as an option. (If X-12-ARIMA is installed you have the option of using it instead of native code. Note that the newer X13 works as a drop-in replacement in exactly the same way.) For details regarding these options, please see chapter 31 of the Gretl User's Guide.

When native exact ML code is used, estimated standard errors are by default based on a numerical approximation to the (negative inverse of the) Hessian, with a fallback to the outer product of the gradient (OPG) if calculation of the numerical Hessian should fail. Two (mutually exclusive) option flags can be used to force the issue: the --opg option forces use of the OPG method, with no attempt to compute the Hessian, while the --hessian flag disables the fallback to OPG. Note that failure of the numerical Hessian computation is generally an indicator of a misspecified model.

The option --lbfgs is specific to estimation using native ARMA code and exact ML: it calls for use of the "limited memory" L-BFGS-B algorithm in place of the regular BFGS maximizer. This may help in some instances where convergence is difficult to achieve.

The option --y-diff-only is specific to estimation of ARIMAX models (models with a non-zero order of integration and including exogenous regressors), and applies only when gretl's native exact ML is used. For such models the default behavior is to difference both the dependent variable and the regressors, but when this option is specified only the dependent variable is differenced, the regressors remaining in level form.

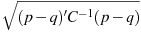

The AIC value given in connection with ARIMA models is calculated according to the definition used in X-12-ARIMA, namely

AIC = -2ℓ + 2k

where ℓ is the log-likelihood and k is the total number of parameters estimated. Note that X-12-ARIMA does not produce information criteria such as AIC when estimation is by conditional ML.
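The relation between the AIC and the log-likelihood can be inspected after native estimation. The sketch below simulates an ARMA(1,1) series with made-up names and backs out the parameter count implied by the reported values; how gretl counts k (for example, whether the error variance is included) is not asserted here.

```hansl
# Simulated ARMA(1,1) process; all names are hypothetical
nulldata 300
setobs 1 1 --time-series
set seed 3
series e = normal()
series y = e
smpl 2 300
y = 0.5*y(-1) + e + 0.3*e(-1)
smpl full

arima 1 0 1 ; y --quiet
scalar ll = $lnl
# Solve AIC = -2*ll + 2*k for k
scalar k_implied = ($aic + 2*ll) / 2
printf "lnl = %g, AIC = %g, implied k = %g\n", ll, $aic, k_implied
```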

The AR and MA roots shown in connection with ARMA estimation are based on the following representation of an ARMA(p, q) process:

(1 - a_1*L - a_2*L^2 - ... - a_p*L^p) Y_t =

c + (1 + b_1*L + b_2*L^2 + ... + b_q*L^q) e_t

The AR roots are therefore the solutions to

1 - a_1*z - a_2*z^2 - ... - a_p*z^p = 0

and stability requires that these roots lie outside the unit circle.

The "frequency" figure printed in connection with AR and MA roots is the λ value that solves z = r * exp(i*2*π*λ) where z is the root in question and r is its modulus.
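For an AR(2) process with real roots the calculation can be done by hand with the quadratic formula. The coefficient values below are arbitrary and chosen so that the discriminant is positive; this is a worked illustration, not the routine gretl uses.

```hansl
# Hypothetical AR(2) coefficients
scalar a1 = 0.5
scalar a2 = 0.3

# 1 - a1*z - a2*z^2 = 0  is equivalent to  a2*z^2 + a1*z - 1 = 0
scalar disc = a1^2 + 4*a2
scalar z1 = (-a1 + sqrt(disc)) / (2*a2)
scalar z2 = (-a1 - sqrt(disc)) / (2*a2)

# Stability: both roots outside the unit circle
scalar stable = abs(z1) > 1 && abs(z2) > 1
printf "roots: %g, %g; stable = %d\n", z1, z2, stable
```

Here one root is positive and one is negative. By the definition above, a positive real root has frequency 0 and a negative real root has frequency 0.5.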

When the --lagselect option is given, this command does not give specific estimates, but instead produces a table showing information criteria and log-likelihood for a number of ARMA or ARIMA specifications. The lag orders p and q are taken as maxima; and if a seasonal specification is provided P and Q are also taken as maxima. In each case the minimum order is taken to be 0, and results are shown for all specifications from minima to maxima. The degrees of differencing in the command, d and/or D, are respected but not treated as subject to search. A matrix holding the results is available via the $test accessor.

Menu path: /Model/Univariate time series/ARIMA

See arima; arma is an alias.

| Arguments: | order x |
| Options: | --corr1=rho (see below) |
| | --sdcrit=multiple (see below) |
| | --boot=N (see below) |
| | --matrix=m (use matrix input) |
| | --quiet (suppress printing of results) |
| Examples: | bds 5 x |
| | bds 3 --matrix=m |
| | bds 4 --sdcrit=2.0 |

Performs the BDS (Brock, Dechert, Scheinkman and LeBaron, 1996) test for nonlinearity of the series x. In an econometric context this is typically used to test a regression residual for violation of the IID condition. The test is based on a set of correlation integrals, designed to detect nonlinearity of progressively higher dimensionality, and the order argument sets the number of such integrals. This must be at least 2; the first integral establishes a baseline but does not support a test. The BDS test is of the portmanteau type: able to detect all manner of departures from linearity but not informative about how exactly the condition was violated.

Instead of giving x as a series, the --matrix option can be used to specify a matrix as input. The matrix must be a vector (column or row).

The correlation integrals are based on a measure of "closeness" of data points, where two points are considered close if they lie within ε of each other. The test requires a specification of ε. By default gretl follows the recommendation of Kanzler (1999): ε is chosen such that the first-order correlation integral is around 0.7. A common alternative (requiring less computation) is to specify ε as a multiple of the standard deviation of the target series. The --sdcrit option supports the latter method; in the third example above ε is set to twice the standard deviation of x. The --corr1 option implies use of Kanzler's method but allows for a target correlation other than 0.7. It should be clear that these two options are mutually exclusive.

BDS test statistics are asymptotically distributed as N(0,1) but the test over-rejects quite markedly in small to moderate-sized samples. For that reason P-values are by default obtained via bootstrapping when x is of length less than 600 (but by reference to the normal distribution otherwise). If you want to use the bootstrap for larger samples you can force the issue by giving a non-zero value for the --boot option. Conversely, if you don't want bootstrapping for smaller samples, give a zero value for --boot.

When bootstrapping is performed the default number of iterations is 1999, but you can specify a different number by giving a value greater than 1 with --boot.

On successful completion of this command, $result retrieves the test results in the form of a matrix with two rows and order – 1 columns. The first row contains test statistics and the second P-values for each of the per-dimension tests under the null that x is linear/IID.
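A short sketch of retrieving the results matrix, using simulated IID data with a made-up series name. Bootstrapping is switched off with --boot=0 to keep the run quick.

```hansl
# IID normal series; the name x is hypothetical
nulldata 500
set seed 5
series x = normal()

bds 3 x --boot=0
matrix R = $result
print R
printf "rows = %d, columns = %d\n", rows(R), cols(R)
```

With order 3 the matrix should have two rows and two columns, in line with the description above.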

| Arguments: | depvar1 depvar2 indepvars1 [ ; indepvars2 ] |
| Options: | --vcv (print covariance matrix) |
| | --robust (robust standard errors) |
| | --cluster=clustvar (see logit for explanation) |
| | --opg (see below) |
| | --save-xbeta (see below) |
| | --verbose (print extra information) |
| Examples: | biprobit y1 y2 0 x1 x2 |
| | biprobit y1 y2 0 x11 x12 ; 0 x21 x22 |
| | See also biprobit.inp |

Estimates a bivariate probit model, using the Newton–Raphson method to maximize the likelihood.

The argument list starts with the two (binary) dependent variables, followed by a list of regressors. If a second list is given, separated by a semicolon, this is interpreted as a set of regressors specific to the second equation, with indepvars1 being specific to the first equation; otherwise indepvars1 is taken to represent a common set of regressors.

By default, standard errors are computed using the analytical Hessian at convergence. But if the --opg option is given the covariance matrix is based on the Outer Product of the Gradient (OPG), or if the --robust option is given QML standard errors are calculated, using a "sandwich" of the inverse of the Hessian and the OPG.

Note that the estimate of rho, the correlation of the error terms across the two equations, is included in the coefficient vector; it's the last element in the accessors $coeff, $stderr and $vcv.

After successful estimation, the accessor $uhat retrieves a matrix with two columns holding the generalized residuals for the two equations; that is, the expected values of the disturbances conditional on the observed outcomes and covariates. By default $yhat retrieves a matrix with four columns, holding the estimated probabilities of the four possible joint outcomes for (y1, y2), in the order (1,1), (1,0), (0,1), (0,0). Alternatively, if the option --save-xbeta is given, $yhat has two columns and holds the values of the index functions for the respective equations.
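The following sketch estimates a bivariate probit on simulated data and pulls out rho and the fitted probabilities. The data-generating process, series names and seed are all invented for the example; the true error correlation is set to 0.5.

```hansl
# Two binary outcomes with correlated latent errors; names are hypothetical
nulldata 500
set seed 11
series x1 = normal()
series x2 = normal()
series e1 = normal()
series e2 = 0.5*e1 + sqrt(0.75)*normal()
series y1 = (0.3 + x1 + e1 > 0)
series y2 = (-0.2 + x2 + e2 > 0)

biprobit y1 y2 0 x1 x2

# rho is the last element of the coefficient vector
matrix b = $coeff
scalar rho_hat = b[rows(b)]
printf "estimated rho = %g\n", rho_hat

# Joint outcome probabilities, one column per outcome
matrix P = $yhat
printf "columns in $yhat: %d\n", cols(P)
```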

The output includes a test of the null hypothesis that the disturbances in the two equations are uncorrelated. This is a likelihood ratio test unless the QML variance estimator is requested, in which case it's a Wald test.

| Option: | --quiet (don't print anything) |
| Examples: | longley.inp |

Must follow the estimation of a model which includes at least two independent variables. Calculates and displays diagnostic information pertaining to collinearity, namely the BKW Table, based on the work of Belsley, Kuh and Welsch (1980). This table presents a sophisticated analysis of the degree and sources of collinearity, via eigenanalysis of the inverse correlation matrix. For a thorough account of the BKW approach with reference to gretl, and with several examples, see Adkins, Waters and Hill (2015).

Following this command the $result accessor may be used to retrieve the BKW table as a matrix. See also the vif command for a simpler approach to diagnosing collinearity.
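A minimal sketch on simulated data with two nearly collinear regressors follows. The names and the degree of collinearity are arbitrary choices for illustration.

```hansl
# x2 is almost a copy of x1; all names are hypothetical
nulldata 100
set seed 8
series x1 = normal()
series x2 = x1 + 0.1*normal()
series y = 1 + x1 + x2 + normal()

ols y 0 x1 x2 --quiet
bkw
matrix B = $result
print B
```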

There is also a function named bkw which offers greater flexibility.

Menu path: Model window, /Analysis/Collinearity

| Argument: | varlist |
| Options: | --notches (show 90 percent interval for median) |
| | --factorized (see below) |
| | --panel (see below) |
| | --matrix=name (plot columns of named matrix) |
| | --output=filename (send output to specified file) |

These plots display the distribution of a variable. The central box encloses the middle 50 percent of the data, i.e. it is bounded by the first and third quartiles. The "whiskers" extend from each end of the box for a range equal to 1.5 times the interquartile range. Observations outside that range are considered outliers and represented via dots. A line is drawn across the box at the median. A "+" sign is used to indicate the mean. If the option of showing a confidence interval for the median is selected, this is computed via the bootstrap method and shown in the form of dashed horizontal lines above and/or below the median.

The --factorized option allows you to examine the distribution of a chosen variable conditional on the value of some discrete factor. For example, if a data set contains wages and a gender dummy variable you can select the wage variable as the target and gender as the factor, to see side-by-side boxplots of male and female wages, as in

boxplot wage gender --factorized

Note that in this case you must specify exactly two variables, with the factor given second.

If the current data set is a panel, and just one variable is specified, the --panel option produces a series of side-by-side boxplots, one for each panel "unit" or group.

Generally, the argument varlist is required, and refers to one or more series in the current dataset (given either by name or ID number). But if a named matrix is supplied via the --matrix option this argument becomes optional: by default a plot is drawn for each column of the specified matrix.

Gretl's boxplots are generated using gnuplot, and it is possible to specify the plot more fully by appending additional gnuplot commands, enclosed in braces. For details, please see the help for the gnuplot command.

In interactive mode the result is displayed immediately. In batch mode the default behavior is that a gnuplot command file is written in the user's working directory, with a name on the pattern gpttmpN.plt, starting with N = 01. The actual plots may be generated later using gnuplot (under MS Windows, wgnuplot). This behavior can be modified by use of the --output=filename option. For details, please see the gnuplot command.
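The options discussed above might be combined as in the following sketch. The data are artificial, and the series names wage and gender as well as the output file names are purely illustrative.

```hansl
# Artificial data: all names here are illustrative assumptions
nulldata 200
set seed 12345
series gender = uniform() > 0.5
series wage = 10 + 2*gender + normal()

# mark the factor as discrete (a precaution for a 0/1 dummy)
discrete gender

# side-by-side boxplots of wage by gender; the factor is given second
boxplot wage gender --factorized --output=wage_by_gender.png

# single boxplot showing a 90 percent interval for the median
boxplot wage --notches --output=wage_notched.png
```

Using --output with a file name sends the plot directly to that file, so no gpttmpN.plt command file should be left behind in batch mode.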

Menu path: /View/Graph specified vars/Boxplots

Break out of a loop. This command can be used only within a loop; it causes command execution to break out of the current (innermost) loop. See also loop, continue.

| Syntax: | catch command |

This is not a command in its own right but can be used as a prefix to most regular commands: the effect is to prevent termination of a script if an error occurs in executing the command. If an error does occur, this is registered in an internal error code which can be accessed as $error (a zero value indicates success). The value of $error should always be checked immediately after using catch, and appropriate action taken if the command failed.

The catch keyword cannot be used before if, elif or endif. In addition it should not be used on calls to user-defined functions; it is intended for use only with gretl commands and calls to "built-in" functions or operators. Furthermore, catch cannot be used in conjunction with "back-arrow" assignment of models or plots to session icons (see chapter 3 of the Gretl User's Guide).
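A minimal sketch of the recommended pattern follows. The matrix names are illustrative, and the product is deliberately non-conformable so that an error is triggered.

```hansl
# Illustrative matrices: a 3x3 times a 2x2 product is not defined
matrix A = I(3)
matrix B = ones(2,2)

# without catch, the script would terminate here
catch matrix C = A * B

# record the error code immediately after the catch line
scalar err = $error
if err
    printf "Multiplication failed (code %d): %s\n", err, errmsg(err)
else
    print C
endif
```

Copying $error into a scalar right away keeps the code available even if later commands are executed before it is examined.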

| Variants: | chow obs |

| chow dummyvar --dummy | |

| Options: | --dummy (use a pre-existing dummy variable) |

| --quiet (don't print estimates for augmented model) | |

| --limit-to=list (limit test to subset of regressors) | |

| Examples: | chow 25 |

| chow 1988:1 | |

| chow female --dummy |

Must follow an OLS regression. If an observation number or date is given, provides a test for the null hypothesis of no structural break at the given split point. The procedure is to create a dummy variable which equals 1 from the split point specified by obs to the end of the sample, 0 otherwise, and also interaction terms between this dummy and the original regressors. If a dummy variable is given, tests the null hypothesis of structural homogeneity with respect to that dummy. Again, interaction terms are added. In either case an augmented regression is run including the additional terms.

By default an F statistic is calculated, taking the augmented regression as the unrestricted model and the original as the restricted. But if the original model used a robust estimator for the covariance matrix, the test statistic is a Wald chi-square value based on a robust estimator of the covariance matrix for the augmented regression.

The --limit-to option can be used to limit the set of interactions with the split dummy variable to a subset of the original regressors. The parameter for this option must be a named list, all of whose members are among the original regressors. The list should not include the constant.
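The variants of the command can be sketched as follows, using artificial data in which there is no structural break. All series names, the list name L and the split point are illustrative assumptions.

```hansl
# Artificial cross-sectional data: names and values are illustrative
nulldata 100
set seed 2024
series x1 = normal()
series x2 = normal()
series female = uniform() > 0.5
series y = 1 + 0.5*x1 - 0.3*x2 + normal()

# chow must follow an OLS regression
ols y const x1 x2

# test for a structural break at observation 50
chow 50

# test structural homogeneity with respect to an existing dummy
chow female --dummy --quiet

# interact the split dummy with x1 only; the list excludes the constant
list L = x1
chow 50 --limit-to=L
```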

Menu path: Model window, /Tests/Chow test

| Options: | --dataset (clear dataset only) |

| --functions (clear functions only) | |

| --all (clear everything) |

By default this command clears the current dataset (if any) plus all saved variables (scalars, matrices, etc.) out of memory. Note that opening a new dataset, or using the nulldata command to create an empty dataset, also has this effect, so explicit use of clear is not usually necessary.

If the --dataset option is given, then only the dataset is cleared (plus any named lists of series); other saved objects such as matrices, scalars and bundles are preserved.

If the --functions option is given, then any user-defined functions, and any functions defined by packages that have been loaded, are cleared out of memory. The dataset and other variables are not affected.

If the --all option is given, clearing is comprehensive: the dataset, saved variables of all kinds, plus user-defined and packaged functions.

| Argument: | varlist |

| Option: | --quiet (don't print anything) |

| Examples: | coeffsum xt xt_1 xr_2 |

| See also restrict.inp |

Must follow a regression. Calculates the sum of the coefficients on the variables in varlist. Prints this sum along with its standard error and the p-value for the null hypothesis that the sum is zero.

Note the difference between this and omit, which tests the null hypothesis that the coefficients on a specified subset of independent variables are all equal to zero.

The --quiet option may be useful if one just wants access to the $test and $pvalue values that are recorded on successful completion.
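As a sketch of the quiet usage, the script below estimates a model on artificial data and then retrieves the recorded test values. The series names and coefficient values are illustrative.

```hansl
# Artificial data: the true coefficients on x1 and x2 sum to 1
nulldata 100
set seed 42
series x1 = normal()
series x2 = normal()
series y = 1 + 0.6*x1 + 0.4*x2 + normal()

ols y const x1 x2 --quiet

# test the null that the coefficients on x1 and x2 sum to zero
coeffsum x1 x2 --quiet
printf "test statistic = %g, p-value = %g\n", $test, $pvalue
```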

Menu path: Model window, /Tests/Sum of coefficients

| Arguments: | order depvar indepvars |

| Options: | --nc (do not include a constant) |

| --ct (include constant and trend) | |

| --ctt (include constant and quadratic trend) | |

| --seasonals (include seasonal dummy variables) | |

| --skip-df (no DF tests on individual variables) | |

| --test-down[=criterion] (automatic lag order) | |

| --verbose (print extra details of regressions) | |

| --silent (don't print anything) | |

| Examples: | coint 4 y x1 x2 |

| coint 0 y x1 x2 --ct --skip-df |

The Engle–Granger (1987) cointegration test. The default procedure is: (1) carry out Dickey–Fuller tests on the null hypothesis that each of the variables listed has a unit root; (2) estimate the cointegrating regression; and (3) run a DF test on the residuals from the cointegrating regression. If the --skip-df flag is given, step (1) is omitted.

If the specified lag order is positive all the Dickey–Fuller tests use that order, with this qualification: if the --test-down option is given, the given value is taken as the maximum and the actual lag order used in each case is obtained by testing down. See the adf command for details of this procedure.

By default, the cointegrating regression contains a constant. If you wish to suppress the constant, add the --nc flag. If you wish to augment the list of deterministic terms in the cointegrating regression with a linear or quadratic trend, add the --ct or --ctt flag. These option flags are mutually exclusive. You also have the option of adding seasonal dummy variables (in the case of quarterly or monthly data).

P-values for this test are based on MacKinnon (1996). The relevant code is included by kind permission of the author.

For the cointegration tests due to Søren Johansen, see johansen.
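The following sketch applies the test to two artificial series that are cointegrated by construction. The series names, sample size and lag order are illustrative assumptions.

```hansl
# Artificial time series: x is a random walk and y follows it closely
nulldata 200
setobs 1 1 --time-series
set seed 101
series x = cum(normal())
series y = 2 + 0.8*x + normal()

# maximum lag 4 with testing down; skip the DF tests on y and x
coint 4 y x --test-down --skip-df

# full procedure, with constant and trend in the cointegrating regression
coint 4 y x --ct
```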

Menu path: /Model/Multivariate time series

This command can be used only within a loop; it has the effect of skipping the subsequent statements within the current iteration of the current (innermost) loop. See also loop, break.

| Variants: | corr [ varlist ] |

| corr --matrix=matname | |

| Options: | --uniform (ensure uniform sample) |

| --spearman (Spearman's rho) | |

| --kendall (Kendall's tau) | |

| --verbose (print rankings) | |

| --plot=mode-or-filename (see below) | |

| --triangle (only plot lower half, see below) | |

| --quiet (do not print anything) | |

| Examples: | corr y x1 x2 x3 |

| corr ylist --uniform | |

| corr x y --spearman | |

| corr --matrix=X --plot=display |

By default, prints the pairwise correlation coefficients (Pearson's product-moment correlation) for the variables in varlist, or for all variables in the data set if varlist is not given. The standard behavior is to use all available observations for computing each pairwise coefficient, but if the --uniform option is given the sample is limited (if necessary) so that the same set of observations is used for all the coefficients. This option has an effect only if there are differing numbers of missing values for the variables used.

The (mutually exclusive) options --spearman and --kendall produce, respectively, Spearman's rank correlation rho and Kendall's rank correlation tau in place of the default Pearson coefficient. When either of these options is given, varlist should contain just two variables.

When a rank correlation is computed, the --verbose option can be used to print the original and ranked data (otherwise this option is ignored).

If varlist contains more than two series and gretl is not in batch mode, a "heatmap" plot of the correlation matrix is shown. This can be adjusted via the --plot option. The acceptable parameters to this option are none (to suppress the plot); display (to display a plot even when in batch mode); or a file name. The effect of providing a file name is as described for the --output option of the gnuplot command. When plotting is active the option --triangle can be used to show only the lower triangle of the matrix plot.

If the alternative form is given, using a named matrix rather than a list of series, the --spearman and --kendall options are not available—but see the npcorr function.

The $result accessor can be used to obtain the correlations as a matrix. Note that if it's this matrix that's of interest, not just the pairwise coefficients, then in the presence of missing values it's advisable to use the --uniform option. Unless a single, common sample is used it is not guaranteed that the correlation matrix will be positive semidefinite, as it ought to be by construction.
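A sketch of retrieving the correlation matrix with a common sample is shown below. The data are artificial and the series and matrix names are illustrative; missing values are placed at different observations so that --uniform has an effect.

```hansl
# Artificial data; nulldata supplies the index series used below
nulldata 50
set seed 5
series x1 = normal()
series x2 = (index == 3) ? NA : 0.5*x1 + normal()
series x3 = (index == 10) ? NA : normal()

# use the same observations for every coefficient, and suppress the plot
corr x1 x2 x3 --uniform --plot=none

# obtain the correlations as a matrix
matrix C = $result
print C

# rank correlation: just two variables are given
corr x1 x2 --spearman
```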

Menu path: /View/Correlation matrix

Other access: Main window pop-up menu (multiple selection)

| Arguments: | y [ order ] |

| Options: | --plot=mode-or-filename (see below) |

| --quiet (don't print anything) | |

| --acf-only (omit partial autocorrelations) | |

| --bartlett (use Bartlett standard errors) | |

| Examples: | corrgm x 12 |

| corrgm GDP 12 --acf-only |

Prints and/or graphs the values of the autocorrelation function (ACF) for the series y, which may be specified by name or number. The values are defined as ρ(u_t, u_{t-s}), where u_t is the t-th observation of the variable u and s denotes the number of lags.

Unless the --acf-only option is given, partial autocorrelations (PACF, calculated using the Durbin–Levinson algorithm) are also shown: these are net of the effects of intervening lags.

Asterisks are used to indicate statistical significance of the individual autocorrelations. By default this is assessed using a standard error of one over the square root of the sample size, but if the --bartlett option is given then Bartlett standard errors are used for the ACF; see below for details. This option also governs the confidence band drawn in the ACF plot, if applicable. In addition the Ljung–Box Q statistic is shown; this tests the null that the series is "white noise" up to the given lag.

If an order value is specified the length of the correlogram is limited to at most that number of lags, otherwise the length is determined automatically, as a function of the frequency of the data and the number of observations.

Please note that in this context Bartlett standard errors are not just a robust variant of the default ones. The default standard errors pertain to the null hypothesis that the series in question is white noise; roughly speaking, this is not rejected at the 5 percent level if no more than 5 percent of the ACF values exceed the bounds set at 1.96 times the standard error. The Bartlett values, however, are associated with the hypothesis that the series follows a moving average process. Specifically, if the ACF values fall within the Bartlett bounds for lags greater than k, we fail to reject the null that y is MA(k). See chapter 7 of Brockwell and Davis (1987) for more on this point.

By default, if gretl is not in batch mode a plot of the correlogram is shown. This can be adjusted via the --plot option. The acceptable parameters to this option are none (to suppress the plot); display (to show a plot even when in batch mode); or a file name. The effect of providing a file name is as described for the --output option of the gnuplot command.

Upon successful completion, the accessors $test and $pvalue can be used to retrieve the Q statistic and its P-value, evaluated at the maximum lag. Note that if you just want this test you can use the ljungbox function instead.
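The points above can be sketched with an artificial MA(1) series, for which the Bartlett bounds are relevant. The series names and the lag order of 12 are illustrative.

```hansl
# Artificial MA(1) process; names are illustrative
nulldata 200
setobs 1 1 --time-series
set seed 77
series e = normal()
series y = e + 0.6*e(-1)

# correlogram up to lag 12 with Bartlett standard errors, no plot
corrgm y 12 --bartlett --plot=none

# Ljung-Box Q statistic and p-value at the maximum lag
printf "Q = %g, p-value = %g\n", $test, $pvalue

# the Q statistic alone, without the correlogram
scalar Q = ljungbox(y, 12)
print Q
```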

Menu path: /Variable/Correlogram

Other access: Main window pop-up menu (single selection)

| Options: | --squares (perform the CUSUMSQ test) |

| --quiet (just print the Harvey–Collier test) | |

| --plot=mode-or-filename (see below) |

Must follow the estimation of a model via OLS. Performs the CUSUM test—or if the --squares option is given, the CUSUMSQ test—for parameter stability. A series of one-step ahead forecast errors is obtained by running a series of regressions: the first regression uses the first k observations and is used to generate a prediction of the dependent variable at observation k + 1; the second uses the first k + 1 observations and generates a prediction for observation k + 2, and so on (where k is the number of parameters in the original model).

The cumulated sum of the scaled forecast errors, or the squares of these errors, is printed. The null hypothesis of parameter stability is rejected at the 5 percent significance level if the cumulated sum strays outside of the 95 percent confidence band.

In the case of the CUSUM test, the Harvey–Collier t-statistic for testing the null hypothesis of parameter stability is also printed. See Greene's Econometric Analysis for details. For the CUSUMSQ test, the 95 percent confidence band is calculated using the algorithm given in Edgerton and Wells (1994).

By default, if gretl is not in batch mode a plot of the cumulated series and confidence band is shown. This can be adjusted via the --plot option. The acceptable parameters to this option are none (to suppress the plot); display (to display a plot even when in batch mode); or a file name. The effect of providing a file name is as described for the --output option of the gnuplot command.
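As a sketch, both tests can be run after an OLS regression on artificial data with stable parameters. The series names are illustrative, and --plot=none is used so the script behaves the same in interactive and batch mode.

```hansl
# Artificial time series with constant parameters
nulldata 120
setobs 1 1 --time-series
set seed 3
series x = normal()
series y = 1 + 0.5*x + normal()

# cusum must follow estimation via OLS
ols y const x --quiet

# CUSUM test, including the Harvey-Collier statistic
cusum --plot=none

# CUSUMSQ test
cusum --squares --plot=none

# print only the Harvey-Collier test
cusum --quiet --plot=none
```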

Menu path: Model window, /Tests/CUSUM(SQ)

| Argument: | varlist |

| Options: | --compact=method (specify compaction method) |

| --quiet (don't report results except on error) | |

| --name=identifier (rename imported series) | |

| --odbc (import from ODBC database) | |

| --no-align (ODBC-specific, see below) |

Reads the variables in varlist from a database file (native gretl, RATS 4.0 or PcGive), which must have been opened previously using the open command. The data command can also be used to import series from DB.NOMICS or from an ODBC database; for details on those variants see gretl + DB.NOMICS or chapter 43 of the Gretl User's Guide, respectively.

The data frequency and sample range may be established via the setobs and smpl commands prior to using this command. Here's an example:

open fedstl.bin
setobs 12 2000:01
smpl ; 2019:12
data unrate cpiaucsl

The commands above open the database named fedstl.bin (which is supplied with gretl), establish a monthly dataset starting in January 2000 and ending in December of 2019, and then import the series named unrate (unemployment rate) and cpiaucsl (all-items CPI).

If setobs and smpl are not specified in this way, the data frequency and sample range are set using the first variable read from the database.

If the series to be read are of higher frequency than the working dataset, you may specify a compaction method as below:

data LHUR PUNEW --compact=average

The five available compaction methods are "average" (takes the mean of the high frequency observations), "last" (uses the last observation), "first", "sum" and "spread". If no method is specified, the default is to use the average. The "spread" method is special: no information is lost, rather it is spread across multiple series, one per sub-period. So for example when adding a monthly series to a quarterly dataset three series are created, one for each month of the quarter; their names bear the suffixes m01, m02 and m03.

If the series to be read are of lower frequency than the working dataset the values of the added data are simply repeated as required, but note that the tdisagg function can then be used for distribution or interpolation ("temporal disaggregation").

In the case of native gretl databases (only), the "glob" characters * and ? can be used in varlist to import series that match the given pattern. For example, the following will import all series in the database whose names begin with cpi:

data cpi*

The --name option can be used to set a name for the imported series other than the original name in the database. The parameter must be a valid gretl identifier. This option is restricted to the case where a single series is specified for importation.

The --no-align option applies only to importation of series via ODBC. By default we require that the ODBC query returns information telling gretl on which rows of the dataset to place the incoming data—or at least that the number of incoming values matches either the length of the dataset or the length of the current sample range. Setting the --no-align option relaxes this requirement: failing the conditions just mentioned, incoming values are simply placed consecutively starting at the first row of the dataset. If there are fewer such values than rows in the dataset the trailing rows are filled with NAs; if there are more such values than rows the extra values are discarded. For more on ODBC importation see chapter 43 of the Gretl User's Guide.

Menu path: /File/Databases

| Arguments: | keyword parameters |

| Option: | --panel-time (see addobs below) |

| Examples: | dataset addobs 24 |

| dataset addobs 2 --panel-time | |

| dataset insobs 10 | |

| dataset compact 1 | |

| dataset compact 4 last | |

| dataset expand | |

| dataset transpose | |

| dataset sortby x1 | |

| dataset resample 500 | |

| dataset renumber x 4 | |

| dataset pad-daily 7 | |

| dataset unpad-daily | |

| dataset clear |

Performs various operations on the data set as a whole, depending on the given keyword, which must be addobs, insobs, clear, compact, expand, transpose, sortby, dsortby, resample, renumber, pad-daily or unpad-daily. Note: with the exception of clear, these actions are not available when the dataset is currently subsampled by selection of cases on some Boolean criterion.

addobs: Must be followed by a positive integer, call it n. Adds n extra observations to the end of the working dataset. This is primarily intended for forecasting purposes. The values of most variables over the additional range will be set to missing, but certain deterministic variables are recognized and extended, namely, a simple linear trend and periodic dummy variables. If the dataset takes the form of a panel, the default action is to add n cross-sectional units to the panel, but if the --panel-time flag is given the effect is to add n observations to the time series for each unit.

insobs: Must be followed by a positive integer no greater than the current number of observations. Inserts a single observation at the specified position. All subsequent data are shifted by one place and the dataset is extended by one observation. All variables apart from the constant are given missing values at the new observation. This action is not available for panel datasets.

clear: No parameter required. Clears out the current data, returning gretl to its initial "empty" state.

compact: This action is available for time series data only; it compacts all the series in the data set to a lower frequency. It requires one parameter, a positive integer representing the new frequency. In general this should be lower than the current frequency (for example, a value of 4 when the current frequency is 12 indicates compaction from monthly to quarterly). The one exception is a new frequency of 52 (weekly) when the current data are daily (frequency 5, 6 or 7). A second parameter may be given, namely one of sum, first, last or spread, to specify, respectively, compaction using the sum of the higher-frequency values, start-of-period values, end-of-period values, or spreading of the higher-frequency values across multiple series (one per sub-period). The default is to compact by averaging.

In the case of compaction from daily to weekly frequency (only), the two special options --repday and --weekstart are available. The first of these allows you to select a "representative day" of the week to serve as the weekly value. The parameter to this option must be an integer from 0 (Sunday) to 6 (Saturday). For example, giving --repday=3 selects Wednesday's value as the weekly value. If the --repday option is not given, we need to know on which day the week is deemed to start in order to align the data correctly. For 5- or 6-day data this is always taken to be Monday, but with 7-day data you have a choice between --weekstart=0 (Sunday) and --weekstart=1 (Monday), with Monday being the default.

expand: This action is only available for annual or quarterly time series data: annual data can be expanded to quarterly or monthly, and quarterly data to monthly. All series in the data set are padded out to the new frequency by repeating the existing values. If the original dataset is annual the default expansion is to quarterly but expand can be followed by 12 to request monthly. See the tdisagg function for more sophisticated means of converting data to higher frequency.

transpose: No additional parameter required. Transposes the current data set. That is, each observation (row) in the current data set will be treated as a variable (column), and each variable as an observation. This action may be useful if data have been read from some external source in which the rows of the data table represent variables.

sortby: The name of a single series or list is required. If one series is given, the observations on all variables in the dataset are re-ordered by increasing value of the specified series. If a list is given, the sort proceeds hierarchically: if the observations are tied in sort order with respect to the first key variable then the second key is used to break the tie, and so on until the tie is broken or the keys are exhausted. Note that this action is available only for undated data.

dsortby: Works as sortby except that the re-ordering is by decreasing value of the key series.

resample: Constructs a new dataset by random sampling, with replacement, of the rows of the current dataset. One argument is required, namely the number of rows to include. This may be less than, equal to, or greater than the number of observations in the original data. The original dataset can be retrieved via the command smpl full.

renumber: Requires the name of an existing series followed by an integer between 1 and the number of series in the dataset minus one. Moves the specified series to the specified position in the dataset, renumbering the other series accordingly. (Position 0 is occupied by the constant, which cannot be moved.)

pad-daily: Valid only if the current dataset contains dated daily data with an incomplete calendar. The effect is to pad the data out to a complete calendar by inserting blank rows (that is, rows containing nothing but NAs). This action requires an integer parameter, namely the number of days per week, which must be 5, 6 or 7, and must be greater than or equal to the current data frequency. On successful completion, the data calendar will be "complete" relative to this value. For example if days-per-week is 5 then all weekdays will be represented, whether or not any data are available for those days.

unpad-daily: Valid only if the current dataset contains dated daily data, in which case it performs the inverse operation of pad-daily. That is, any rows that contain nothing but NAs are removed, while the time-series property of the dataset is preserved along with the dates of the individual observations.
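Two of the actions above, sortby and addobs, can be sketched on a small undated dataset. The series name x is illustrative; the index series is the one created by nulldata.

```hansl
# Small undated dataset; nulldata creates the index series
nulldata 8
series x = 9 - index

# reorder all observations by increasing value of x
dataset sortby x
print index x --byobs

# append four observations at the end of the dataset
dataset addobs 4
printf "number of observations: %d\n", $nobs
```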

Menu path: /Data

| Variants: | delete varlist |

| delete varname | |

| delete --type=type-name | |

| delete pkgname | |

| Options: | --db (delete series from database) |

| --force (see below) |

This command is an all-purpose destructor. It should be used with caution; no confirmation is asked.

In the first form above, varlist is a list of series, given by name or ID number. Note that when you delete series any series with higher ID numbers than those on the deletion list will be re-numbered. If the --db option is given, this command deletes the listed series not from the current dataset but from a gretl database, assuming that a database has been opened, and the user has write permission for the file in question. See also the open command.

In the second form, the name of a scalar, matrix, string or bundle may be given for deletion. The --db option is not applicable in this case. Note that series and variables of other types should not be mixed in a given call to delete.

In the third form, the --type option must be accompanied by one of the following type-names: matrix, bundle, string, list, scalar or array. The effect is to delete all variables of the given type. In this case no argument other than the option should be given.

The fourth form can be used to unload a function package. In this case the .gfn suffix must be supplied, as in

delete somepkg.gfn

Note that this does not delete the package file, it just unloads the package from memory.

In general it is not permitted to delete variables in the context of a loop, since this may threaten the integrity of the loop code. However, if you are confident that deleting a certain variable is safe you can override this prohibition by appending the --force flag to the delete command.
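The first three forms can be sketched as follows. All object names are illustrative.

```hansl
# Create a few objects of different types
nulldata 10
series x = normal()
series y = normal()
scalar s = 1
matrix M = I(2)
matrix N = ones(2,2)

# first form: delete series by name
delete x y

# second form: delete a single non-series variable
delete s

# third form: delete all variables of a given type
delete --type=matrix
```

Series and variables of other types are deleted in separate calls here, in line with the rule that they should not be mixed in one delete command.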

Menu path: Main window pop-up (single selection)

| Argument: | varlist |

| Examples: | penngrow.inp, sw_ch12.inp, sw_ch14.inp |

The first difference of each variable in varlist is obtained and the result stored in a new variable with the prefix d_. Thus diff x y creates the new variables

d_x = x(t) - x(t-1)
d_y = y(t) - y(t-1)

Menu path: /Add/First differences of selected variables

| Variants: | difftest x y |

| difftest x --split-by=dummy | |

| Options: | --sign (Sign test, the default) |

| --rank-sum (Wilcoxon rank-sum test) | |

| --signed-rank (Wilcoxon signed-rank test) | |

| --verbose (print extra output) | |

| --quiet (suppress printed output) | |

| Examples: | ooballot.inp |

Carries out a nonparametric test for a difference between two samples, the specific test depending on the option selected. In the primary form the samples are represented by the series x and y. In the alternate form, with the --split-by option, the samples are two subsets of the series x, for which the series dummy takes the values 0 and 1, respectively. The alternate form is available only in conjunction with the --rank-sum option, since the other tests require paired observations.

With the --sign option, the Sign test is performed. This test is based on the fact that if two samples, x and y, are drawn randomly from the same distribution, the probability that x_i > y_i, for each observation i, should equal 0.5. The test statistic is w, the number of observations for which x_i > y_i. Under the null hypothesis this follows the Binomial distribution with parameters (n, 0.5), where n is the number of observations.

With the --rank-sum option, the Wilcoxon rank-sum test is performed. This test proceeds by ranking the observations from both samples jointly, from smallest to largest, then finding the sum of the ranks of the observations from one of the samples. The two samples do not have to be of the same size, and if they differ the smaller sample is used in calculating the rank-sum. Under the null hypothesis that the samples are drawn from populations with the same median, the probability distribution of the rank-sum can be computed for any given sample sizes; and for reasonably large samples a close Normal approximation exists.

With the --signed-rank option, the Wilcoxon signed-rank test is performed. This is designed for matched data pairs such as, for example, the values of a variable for a sample of individuals before and after some treatment. The test proceeds by finding the differences between the paired observations, x_i - y_i, ranking these differences by absolute value, then assigning to each pair a signed rank, the sign agreeing with the sign of the difference. One then calculates W+, the sum of the positive signed ranks. As with the rank-sum test, this statistic has a well-defined distribution under the null that the median difference is zero, which converges to the Normal for samples of reasonable size.

For the Wilcoxon tests, if the --verbose option is given then the ranking is printed. (This option has no effect if the Sign test is selected.)

On successful completion the accessors $test and $pvalue are available. If one just wants to obtain these values the --quiet flag can be appended to the command.
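The variants can be sketched on artificial paired data as follows. The series names x, y and grp are illustrative, and the index series is the one created by nulldata.

```hansl
# Artificial paired samples: y is shifted upward relative to x
nulldata 40
set seed 99
series x = normal()
series y = x + 0.5 + normal()

# Sign test (the default)
difftest x y --sign

# Wilcoxon signed-rank test, retrieving the results quietly
difftest x y --signed-rank --quiet
printf "signed-rank: test = %g, p-value = %g\n", $test, $pvalue

# alternate form: split x into two samples using a 0/1 series
series grp = index > 20
difftest x --split-by=grp --rank-sum
```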

| Argument: | varlist |

| Option: | --reverse (mark variables as continuous) |

| Examples: | ooballot.inp, oprobit.inp |

Marks each variable in varlist as being discrete. By default all variables are treated as continuous; marking a variable as discrete affects the way the variable is handled in frequency plots, and also allows you to select the variable for the command dummify.

If the --reverse flag is given, the operation is reversed; that is, the variables in varlist are marked as being continuous.

Menu path: /Variable/Edit attributes

| Argument: | p ; depvar indepvars [ ; instruments ] |

| Options: | --quiet (don't show estimated model) |

| --vcv (print covariance matrix) | |

| --two-step (perform 2-step GMM estimation) | |

| --system (add equations in levels) | |

| --collapse (see below) | |

| --time-dummies (add time dummy variables) | |

| --dpdstyle (emulate DPD package for Ox) | |

| --asymptotic (uncorrected asymptotic standard errors) | |

| --keep-extra (see below) | |

| Examples: | dpanel 2 ; y x1 x2 |

| dpanel 2 ; y x1 x2 --system | |

| dpanel {2 3} ; y x1 x2 ; x1 | |

| dpanel 1 ; y x1 x2 ; x1 GMM(x2,2,3) | |

| See also bbond98.inp |

Carries out estimation of dynamic panel data models (that is, panel models including one or more lags of the dependent variable) using either the GMM-DIF or GMM-SYS method.

The parameter p represents the order of the autoregression for the dependent variable. In the simplest case this is a scalar value, but a pre-defined matrix may be given for this argument, to specify a set of (possibly non-contiguous) lags to be used.

The dependent variable and regressors should be given in levels form; they will be differenced automatically (since this estimator uses differencing to cancel out the individual effects).

The last (optional) field in the command is for specifying instruments. If no instruments are given, it is assumed that all the independent variables are strictly exogenous. If you specify any instruments, you should include in the list any strictly exogenous independent variables. For predetermined regressors, you can use the GMM function to include a specified range of lags in block-diagonal fashion. This is illustrated in the fourth example above. The first argument to GMM is the name of the variable in question, the second is the minimum lag to be used as an instrument, and the third is the maximum lag. The same syntax can be used with the GMMlevel function to specify GMM-type instruments for the equations in levels.

The --collapse option can be used to limit the proliferation of "GMM-style" instruments, which can be a problem with this estimator. Its effect is to reduce such instruments from one per lag per observation to one per lag.
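The effect of collapsing on the instrument count can be sketched in a few lines of Python. This is a simplified illustration, not gretl's implementation: the function name and arguments are hypothetical, periods are numbered 1 to n_periods, and the count ignores the autoregressive order p and any missing observations.

```python
def gmm_instrument_counts(n_periods, min_lag, max_lag):
    """Count GMM-style instrument columns for a single variable.

    Assumption: the equation for period t can use the level dated
    t - lag as an instrument whenever t - lag >= 1.
    Returns (uncollapsed, collapsed) column counts.
    """
    per_period = 0      # block-diagonal layout: one column per lag per period
    lags_used = set()   # collapsed layout: one column per distinct lag
    for t in range(1, n_periods + 1):
        for lag in range(min_lag, max_lag + 1):
            if t - lag >= 1:
                per_period += 1
                lags_used.add(lag)
    return per_period, len(lags_used)


full, collapsed = gmm_instrument_counts(n_periods=9, min_lag=2, max_lag=8)
print(full, collapsed)  # prints: 28 7
```

Under these assumptions the uncollapsed count grows roughly with the square of the number of periods, while the collapsed count is bounded by the number of lags requested.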

By default the results of 1-step estimation are reported (with robust standard errors). You may select 2-step estimation as an option. In both cases tests for autocorrelation of orders 1 and 2 are provided, as well as Sargan and/or Hansen overidentification tests and a Wald test for the joint significance of the regressors. Note that in this differenced model first-order autocorrelation is not a threat to the validity of the model, but second-order autocorrelation violates the maintained statistical assumptions.
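The remark about autocorrelation can be made concrete with a short derivation. The notation is an assumption introduced here for illustration: the disturbances in levels, written as ε, are taken to be serially uncorrelated with constant variance σ².

```latex
\begin{aligned}
\Delta\varepsilon_{it} &= \varepsilon_{it} - \varepsilon_{i,t-1},
  \qquad \operatorname{Var}(\Delta\varepsilon_{it}) = 2\sigma^2 \\
\operatorname{Cov}(\Delta\varepsilon_{it}, \Delta\varepsilon_{i,t-1})
  &= \operatorname{Cov}(\varepsilon_{it} - \varepsilon_{i,t-1},\;
     \varepsilon_{i,t-1} - \varepsilon_{i,t-2}) = -\sigma^2
  \quad\Rightarrow\quad \rho_1 = -\tfrac{1}{2} \\
\operatorname{Cov}(\Delta\varepsilon_{it}, \Delta\varepsilon_{i,t-2})
  &= \operatorname{Cov}(\varepsilon_{it} - \varepsilon_{i,t-1},\;
     \varepsilon_{i,t-2} - \varepsilon_{i,t-3}) = 0
  \quad\Rightarrow\quad \rho_2 = 0
\end{aligned}
```

Differencing therefore induces first-order autocorrelation even when the original disturbances are well behaved, whereas non-zero second-order autocorrelation in the differences indicates serial correlation in the levels disturbances.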

In the case of 2-step estimation, standard errors are by default computed using the finite-sample correction suggested by Windmeijer (2005). The standard asymptotic standard errors associated with the 2-step estimator are generally reckoned to be an unreliable guide to inference, but if for some reason you want to see them you can use the --asymptotic option to turn off the Windmeijer correction.

If the --time-dummies option is given, a set of time dummy variables is added to the specified regressors. The number of dummies is one less than the maximum number of periods used in estimation, to avoid perfect collinearity with the constant. The dummies are entered in differenced form unless the --dpdstyle option is given, in which case they are entered in levels.

As with other estimation commands, a $model bundle is available after estimation. In the case of dpanel, the --keep-extra option can be used to save additional information in this bundle, namely the GMM weight and instrument matrices.

For further details and examples, please see chapter 24 of the Gretl User's Guide.

Menu path: /Model/Panel/Dynamic panel model

| Argument: | varlist |

| Options: | --drop-first (omit lowest value from encoding) |

| --drop-last (omit highest value from encoding) |

For any suitable variables in varlist, creates a set of dummy variables coding for the distinct values of that variable. Suitable variables are those that have been explicitly marked as discrete, or those that take on a fairly small number of values all of which are "fairly round" (multiples of 0.25).

By default a dummy variable is added for each distinct value of the variable in question. For example if a discrete variable x has 5 distinct values, 5 dummy variables will be added to the data set, with names Dx_1, Dx_2 and so on. The first dummy variable will have value 1 for observations where x takes on its smallest value, 0 otherwise; the next dummy will have value 1 when x takes on its second-smallest value, and so on. If one of the option flags --drop-first or --drop-last is added, then either the lowest or the highest value of each variable is omitted from the encoding (which may be useful for avoiding the "dummy variable trap").
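The encoding described above can be sketched in Python as follows. This is an illustrative re-implementation, not gretl code; in particular, keeping the original numbering of the dummies when a category is dropped is an assumption, and the prefix Dx_ simply follows the example of a variable named x.

```python
def dummify(values, drop_first=False, drop_last=False):
    """Return a dict mapping dummy names to 0/1 lists.

    One dummy is created per distinct value, ordered from the smallest
    value (Dx_1) upwards.
    """
    distinct = sorted(set(values))
    # Number the categories before dropping any, so the remaining
    # names keep their position (an assumption, see the text above).
    numbered = list(enumerate(distinct, start=1))
    if drop_first:
        numbered = numbered[1:]
    if drop_last:
        numbered = numbered[:-1]
    return {
        f"Dx_{i}": [1 if v == d else 0 for v in values]
        for i, d in numbered
    }


x = [3, 1, 2, 3, 1]
for name, column in dummify(x, drop_first=True).items():
    print(name, column)
```

With a dropped category the remaining dummies no longer sum to one in every observation, which is what avoids perfect collinearity with a constant.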

There is also a corresponding function for this command; see dummify. This makes it possible to embed the call directly in a regression specification. For example, the following line specifies a model where y is regressed on the set of dummy variables coding for x. (However, option flags are for the command variant only, not for the function variant of dummify.)

ols y dummify(x)

Other access: Main window pop-up menu (single selection)

| Arguments: | depvar indepvars [ ; censvar ] |

| Options: | --exponential (use exponential distribution) |

| --loglogistic (use log-logistic distribution) | |

| --lognormal (use log-normal distribution) | |

| --medians (fitted values are medians) | |

| --robust (robust (QML) standard errors) | |

| --cluster=clustvar (see logit for explanation) | |

| --vcv (print covariance matrix) | |

| --verbose (print details of iterations) | |

| --quiet (don't print anything) | |

| Examples: | duration y 0 x1 x2 |

| duration y 0 x1 x2 ; cens | |

| See also weibull.inp |

Estimates a duration model: the dependent variable (which must be positive) represents the duration of some state of affairs, for example the length of spells of unemployment for a cross-section of respondents. By default the Weibull distribution is used but the exponential, log-logistic and log-normal distributions are also available.

If some of the duration measurements are right-censored (e.g. an individual's spell of unemployment has not come to an end within the period of observation) then you should supply the trailing argument censvar, a series in which non-zero values indicate right-censored cases.

By default the fitted values obtained via the accessor $yhat are the conditional means of the durations, but if the --medians option is given then $yhat provides the conditional medians instead.
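To see how far apart the two kinds of fitted value can be, the following Python sketch compares the mean and median of a Weibull distribution using the standard textbook formulas. The scale and shape arguments are hypothetical and refer to the usual parameterization with survival function exp(-(t/scale)^shape); gretl's internal parameterization may differ, so this is not a reproduction of what $yhat contains.

```python
import math


def weibull_mean(scale, shape):
    """Mean of a Weibull distribution: scale * Gamma(1 + 1/shape)."""
    return scale * math.gamma(1.0 + 1.0 / shape)


def weibull_median(scale, shape):
    """Median of a Weibull distribution: scale * (ln 2)^(1/shape)."""
    return scale * math.log(2.0) ** (1.0 / shape)


# shape = 1 gives the exponential distribution as a special case
for shape in (0.8, 1.0, 1.5):
    print(shape, weibull_mean(10.0, shape), weibull_median(10.0, shape))
```

For right-skewed duration distributions such as these the median lies below the mean, which is one reason for preferring medians as a summary of a typical spell.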

Please see chapter 38 of the Gretl User's Guide for details.

Menu path: /Model/Limited dependent variable/Duration data

See if.

See if. Note that else requires a line to itself, before the following conditional command. You can append a comment, as in

else # OK, do something different

But you cannot append a command, as in

else x = 5 # wrong!

Ends a block of commands of some sort. For example, end system terminates an equation system.

See if.

Marks the end of a command loop. See loop.

| Options: | --complete (create a complete document) |

| --output=filename (send output to specified file) |

Must follow the estimation of a model. Prints the estimated model in the form of a LaTeX equation. If a filename is specified using the --output option, output goes to that file; otherwise it goes to a file with a name of the form equation_N.tex, where N is the number of models estimated to date in the current session. See also tabprint.

The output file will be written in the currently set workdir, unless the filename string contains a full path specification.

If the --complete flag is given, the LaTeX file is a complete document, ready for processing; otherwise it must be included in a document.

Menu path: Model window, /LaTeX

| Arguments: | depvar indepvars |

| Example: | equation y x1 x2 x3 const |

Specifies an equation within a system of equations (see system). The syntax for specifying an equation within an SUR system is the same as that for, e.g., ols. For an equation within a Three-Stage Least Squares system you may either (a) give an OLS-type equation specification and provide a common list of instruments using the instr keyword (again, see system), or (b) use the same equation syntax as for tsls.

| Arguments: | [ systemname ] [ estimator ] |

| Options: | --iterate (iterate to convergence) |

| --no-df-corr (no degrees of freedom correction) | |

| --geomean (see below) | |

| --quiet (don't print results) | |

| --verbose (print details of iterations) | |

| Examples: | estimate "Klein Model 1" method=fiml |

| estimate Sys1 method=sur | |

| estimate Sys1 method=sur --iterate |

Calls for estimation of a system of equations, which must have been previously defined using the system command. The name of the system should be given first, surrounded by double quotes if the name contains spaces. The estimator, which must be one of ols, tsls, sur, 3sls, fiml or liml, is preceded by the string method=. These arguments are optional if the system in question has already been estimated and occupies the place of the "last model"; in that case the estimator defaults to the previously used value.

If the system in question has had a set of restrictions applied (see the restrict command), estimation will be subject to the specified restrictions.

If the estimation method is sur or 3sls and the --iterate flag is given, the estimator will be iterated. In the case of SUR, if the procedure converges the results are maximum likelihood estimates. Iteration of three-stage least squares, however, does not in general converge on the full-information maximum likelihood results. The --iterate flag is ignored for other methods of estimation.

If the equation-by-equation estimators ols or tsls are chosen, the default is to apply a degrees of freedom correction when calculating standard errors. This can be suppressed using the --no-df-corr flag. This flag has no effect with the other estimators; no degrees of freedom correction is applied in any case.

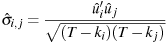

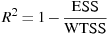

By default, the formula used in calculating the elements of the cross-equation covariance matrix is

If the --geomean flag is given, a degrees of freedom correction is applied: the formula is

where the ks denote the number of independent parameters in each equation.
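For readers without access to the rendered formulas, the two estimators are commonly written as below. Apart from the ks, the notation is an assumption made here: the vector of residuals from equation i is written with a hat over u, and T is the number of observations used in estimation.

```latex
% Default: no degrees of freedom correction
\hat{\sigma}_{ij} = \frac{\hat{u}_i' \hat{u}_j}{T}

% With --geomean: divide by the geometric mean of the two
% equations' degrees of freedom
\hat{\sigma}_{ij} = \frac{\hat{u}_i' \hat{u}_j}{\sqrt{(T - k_i)(T - k_j)}}
```

When every equation has the same number of parameters k, the second divisor reduces to T - k.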

If the --verbose option is given and an iterative method is specified, details of the iterations are printed.

| Argument: | expression |

| Examples: | eval x |

| eval inv(X'X) | |

| eval sqrt($pi) |

This command makes gretl act like a glorified calculator. The program evaluates expression and prints its value. The argument may be the name of a variable, or something more complicated. In any case, it should be an expression which could stand as the right-hand side of an assignment statement.

In interactive use (for instance in the gretl console) an equals sign works as shorthand for eval, as in

=sqrt(x)

(with or without a space following "="). But this variant is not accepted in scripting mode since it could easily mask coding errors.

In most contexts print can be used in place of eval to much the same effect. See also printf for the case where you wish to combine textual and numerical output.

| Variants: | fcast [startobs endobs] [vname] |

| fcast [startobs endobs] steps-ahead [vname] --recursive | |

| Options: | --dynamic (create dynamic forecast) |

| --static (create static forecast) | |

| --out-of-sample (generate post-sample forecast) | |

| --no-stats (don't print forecast statistics) | |

| --stats-only (only print forecast statistics) | |

| --quiet (don't print anything) | |

| --recursive (see below) | |

| --all-probs (see below) | |

| --plot=filename (see below) | |

| Examples: | fcast 1997:1 2001:4 f1 |

| fcast fit2 | |

| fcast 2004:1 2008:3 4 rfcast --recursive | |

| See also gdp_midas.inp |

Must follow an estimation command. Forecasts are generated for a certain range of observations: if startobs and endobs are given, for that range (if possible); otherwise if the --out-of-sample option is given, for observations following the range over which the model was estimated; otherwise over the currently defined sample range. If an out-of-sample forecast is requested but no relevant observations are available, an error is flagged. Depending on the nature of the model, standard errors may also be generated; see below. Also see below for the special effect of the --recursive option.

If the last model estimated is a single equation, then the optional vname argument has the following effect: the forecast values are not printed, but are saved to the dataset under the given name. If the last model is a system of equations, vname has a different effect, namely selecting a particular endogenous variable for forecasting (the default being to produce forecasts for all the endogenous variables). In the system case, or if vname is not given, the forecast values can be retrieved using the accessor $fcast, and the standard errors, if available, via $fcse.

The choice between a static and a dynamic forecast applies only in the case of dynamic models, with an autoregressive error process or including one or more lagged values of the dependent variable as regressors. Static forecasts are one step ahead, based on realized values from the previous period, while dynamic forecasts employ the chain rule of forecasting. For example, if a forecast for y in 2008 requires as input a value of y for 2007, a static forecast is impossible without actual data for 2007. A dynamic forecast for 2008 is possible if a prior forecast can be substituted for y in 2007.
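The distinction can be illustrated with a first-order autoregression. The Python sketch below is not gretl code: the function name, the coefficient values and the data are all hypothetical, and the coefficients are taken as given rather than estimated.

```python
def ar1_forecasts(y, const, phi, start, dynamic):
    """Forecast y[start:] from the model y_t = const + phi * y_{t-1}.

    Static: every forecast uses the realized value from the previous period.
    Dynamic: after the first forecast, the previous forecast is substituted
    for the realized value (the chain rule of forecasting).
    """
    forecasts = []
    prev = y[start - 1]  # last realized value before the forecast range
    for t in range(start, len(y)):
        f = const + phi * prev
        forecasts.append(f)
        prev = f if dynamic else y[t]
    return forecasts


y = [10.0, 12.0, 11.0, 13.0, 12.5]
print(ar1_forecasts(y, const=2.0, phi=0.8, start=2, dynamic=False))
print(ar1_forecasts(y, const=2.0, phi=0.8, start=2, dynamic=True))
```

The first forecast is the same in both cases, since it rests on the last realized value; the two sequences diverge from the second step onwards. Only the static version needs actual data for every period in the forecast range.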

The default is to give a static forecast for any portion of the forecast range that lies within the sample range over which the model was estimated, and a dynamic forecast (if relevant) out of sample. The --dynamic option requests a dynamic forecast from the earliest possible date, and the --static option requests a static forecast even out of sample.

The --recursive option is presently available only for single-equation models estimated via OLS. When this option is given the forecasts are recursive. That is, each forecast is generated from an estimate of the given model using data from a fixed starting point (namely, the start of the sample range for the original estimation) up to the forecast date minus k, where k is the number of steps ahead, which must be given in the steps-ahead argument. The forecasts are always dynamic if this is applicable. Note that the steps-ahead argument should be given only in conjunction with the --recursive option.
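The recursive scheme can be sketched as follows in Python. This is an illustration under simplifying assumptions rather than gretl's implementation: the model is a simple regression of y on a constant and one regressor x with no lagged dependent variable, observations are indexed from 0, and the helper names are hypothetical.

```python
def ols_fit(x, y):
    """Least-squares fit of y = a + b*x; returns (a, b)."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    return my - b * mx, b


def recursive_forecasts(x, y, first, last, k):
    """k-step-ahead recursive forecasts for observations first..last.

    The forecast for observation t comes from a model re-estimated on
    the window running from the fixed start (observation 0) up to
    t - k, so the window grows by one observation at each step.
    """
    out = []
    for t in range(first, last + 1):
        end = t - k  # last observation used in estimation
        a, b = ols_fit(x[: end + 1], y[: end + 1])
        out.append(a + b * x[t])
    return out


x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
y = [3.1, 4.9, 7.2, 8.8, 11.1, 12.9]
print(recursive_forecasts(x, y, first=3, last=5, k=1))
```

Because no forecast uses data dated later than t - k, the sequence mimics what a forecaster re-estimating the model each period would have produced in real time.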